The future of film hinges on the fusion of artistry and automation. Autonomous camera rigs are reshaping modern production by offering filmmakers unprecedented precision, repeatability, and creative freedom. These intelligent systems leverage advanced sensors, on-board computing, and machine learning to plan complex moves, avoid obstacles in real time, and integrate seamlessly with both physical and virtual production workflows. In this article, we delve into the core technologies and design philosophies driving this revolution and how they promise to redefine what is possible on set.

In this article, we will explore:

| Table of Contents | |
|---|---|
| I. | AI-Driven Path Planning & Predictive Motion Control |
| II. | Multi-Axis Synchronization for True 6-Degree-of-Freedom Precision |
| III. | Real-Time Environmental Mapping & Dynamic Obstacle Avoidance |
| IV. | Sensor Fusion: Lidar, IMU & Machine-Vision Integration |
| V. | Edge-AI Architectures for Low-Latency On-Board Processing |
| VI. | High-Dynamic-Range Control Systems & Actuator Thermal Management |
| VII. | Modular Rig Design: Scalability Across Studio, Field & Aerial Shoots |
| VIII. | Seamless Virtual Production: Syncing Rigs with Unreal Engine Pipelines |
| IX. | Data-Driven Workflow Automation: From Previs to Live Overrides |
| X. | Cloud-Based Fleet Orchestration & Remote Diagnostics |
| XI. | Regulatory Compliance & On-Set Safety Protocols for Autonomous Systems |
| XII. | Continuous Learning & Firmware Updates: Future-Proofing Autonomous Rigs |

AI-Driven Path Planning & Predictive Motion Control

Modern autonomous rigs use machine learning algorithms to map out complex camera trajectories before the first take. By analyzing shot requirements such as speed, framing changes, and actor positions, an AI engine predicts paths that blend smooth motion with dynamic responsiveness. This precomputed planning minimizes setup time and reduces human error. During shooting, the rig refines its motion plan within milliseconds, adapting on the fly if an actor strays from their mark or lighting conditions shift. The result is a consistently repeatable move, take after take, which opens up shots once reserved for big-budget blockbusters.
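One common way to get motion that is both smooth and quick to re-plan is a minimum-jerk profile. The sketch below is an illustration only, not the planner any particular rig uses: `min_jerk` and `replan` are hypothetical names, and a single axis stands in for the full camera pose.

```python
def min_jerk(p0: float, p1: float, duration: float, t: float) -> float:
    """Position at time t on a minimum-jerk move from p0 to p1."""
    s = min(max(t / duration, 0.0), 1.0)  # normalized time, clamped to [0, 1]
    # Quintic blend: zero velocity and zero acceleration at both ends.
    blend = 10 * s**3 - 15 * s**4 + 6 * s**5
    return p0 + (p1 - p0) * blend


def replan(current_pos: float, new_target: float, time_left: float):
    """Return a position function that heads for an updated target.

    Simplification (assumption): the new segment starts from rest. A real
    planner would also match the current velocity to avoid a visible hitch.
    """
    return lambda t: min_jerk(current_pos, new_target, time_left, t)
```

If an actor misses a mark halfway through a move, calling `replan` with the rig's current position and the corrected target yields a fresh curve for the remaining time.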

Multi-Axis Synchronization for True 6-Degree-of-Freedom Precision

Cinema demands movement along three translational axes (X, Y, Z) and three rotational axes (pitch, yaw, roll). Autonomous rigs coordinate all six degrees of freedom simultaneously, ensuring each axis accelerates, decelerates, and stops in perfect harmony. Advanced motion controllers issue split-second commands to each motor, eliminating jitter, overshoot, or lag. This multi-axis synchronization lets filmmakers execute sweeping crane-like arcs, intricate dolly moves, and gravity-defying spins, all with sub-millimeter accuracy. Whether capturing a high-speed chase or a delicate dialogue scene, precision synchronization guarantees repeatability across multiple takes.
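The core idea of keeping axes "in harmony" can be sketched as time scaling: the axis that needs the longest time sets a shared duration, and every other axis slows down to match. This is a minimal sketch under simplifying assumptions (constant speed, no acceleration limits); `synchronize` and the example limits are hypothetical.

```python
def synchronize(distances, max_speeds):
    """Return (duration, per-axis speeds) so every axis finishes together.

    distances:  signed travel per axis (e.g. meters for X/Y/Z, degrees for
                pitch/yaw/roll).
    max_speeds: positive speed limit per axis, in matching units per second.
    """
    # The axis that is slowest relative to its own limit sets the duration.
    duration = max(abs(d) / v for d, v in zip(distances, max_speeds))
    if duration == 0.0:
        return 0.0, [0.0] * len(distances)
    return duration, [d / duration for d in distances]


# Example: X, Y, Z in meters; pitch, yaw, roll in degrees.
duration, speeds = synchronize(
    [2.0, 0.0, 0.5, 10.0, 90.0, 0.0],
    [1.0, 1.0, 0.5, 30.0, 45.0, 30.0],
)
```

Here the X travel and the yaw rotation both need two seconds at full speed, so the other axes are slowed to arrive at the same instant.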

Real-Time Environmental Mapping & Dynamic Obstacle Avoidance

Gone are the days of blind rails and manual safety checks. Autonomous rigs employ depth cameras and Lidar to build a live 3D map of their surroundings. As the environment changes (crew members crossing paths, boom poles entering the frame, or set pieces shifting), the rig’s navigation system recalculates safe corridors in real time. Onboard collision-detection algorithms apply dynamic brakes or reroute the camera smoothly around obstacles without jarring the shot. This continuous situational awareness enhances on-set safety and allows operations in confined or crowded spaces that would otherwise be off-limits to traditional gear.
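The "dynamic brakes" behavior can be approximated by a speed governor that slows the rig as the nearest mapped obstacle gets closer. The following is a rough sketch, assuming obstacles arrive as 2D points from the mapping layer; `speed_scale` and the two distance thresholds are hypothetical values chosen for illustration.

```python
import math


def speed_scale(rig_xy, obstacles, stop_dist=0.5, slow_dist=2.0):
    """Return a factor in [0, 1] to multiply the commanded speed by.

    rig_xy:    (x, y) position of the rig in meters.
    obstacles: iterable of (x, y) obstacle points in meters.
    Beyond slow_dist the rig runs at full speed; inside stop_dist it halts;
    in between the speed ramps down linearly.
    """
    obstacles = list(obstacles)
    if not obstacles:
        return 1.0
    nearest = min(math.dist(rig_xy, o) for o in obstacles)
    ramp = (nearest - stop_dist) / (slow_dist - stop_dist)
    return min(max(ramp, 0.0), 1.0)
```

A linear ramp keeps the slowdown gradual, which matters on camera: an abrupt stop would show up in the shot.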

Sensor Fusion: Lidar, IMU & Machine-Vision Integration

No single sensor can deliver total certainty, so autonomous rigs blend data from multiple sources. Lidar provides accurate distance readings, while an internal IMU (Inertial Measurement Unit) tracks acceleration and orientation at high frequency. Meanwhile, high-resolution cameras feed visual odometry into neural networks that recognize landmarks and maintain absolute position. By fusing these inputs, the rig achieves robust tracking even under poor lighting or reflective surfaces. Sensor fusion smooths out noise, compensates for individual sensor drift, and guarantees consistent performance across diverse shooting environments.
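A scalar Kalman filter is one standard way to combine a fast but drifting source with a slower absolute one. The sketch below is a one-dimensional illustration, assuming the IMU supplies a velocity estimate and the Lidar supplies a position reading; the class name and the noise values `q` and `r` are hypothetical, and a real rig would track a full 6-DoF state.

```python
class Kalman1D:
    """Fuse IMU-driven prediction with Lidar position measurements (1D)."""

    def __init__(self, x=0.0, p=1.0, q=0.01, r=0.04):
        self.x = x  # position estimate
        self.p = p  # variance of the estimate
        self.q = q  # process noise added per prediction (IMU drift)
        self.r = r  # measurement noise variance (Lidar)

    def predict(self, velocity, dt):
        """Dead-reckon forward using the IMU-derived velocity."""
        self.x += velocity * dt
        self.p += self.q  # uncertainty grows until the next measurement

    def update(self, z):
        """Correct the estimate with a Lidar position reading z."""
        k = self.p / (self.p + self.r)  # Kalman gain in [0, 1]
        self.x += k * (z - self.x)
        self.p *= 1.0 - k
        return self.x
```

Between Lidar readings the estimate follows the IMU and its uncertainty grows; each reading pulls the estimate back and shrinks the uncertainty, which is how fusion compensates for drift.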

Edge-AI Architectures for Low-Latency On-Board Processing

Latency is the enemy of real-time control. To avoid delays caused by remote servers, autonomous rigs are outfitted with powerful edge-AI modules: compact GPUs or specialized neural processors built into the camera head itself. These processors run complex computer-vision and planning algorithms on the rig, ensuring decision loops complete within a few milliseconds. With all critical computations handled locally, the system can react almost instantly to changes in subject movement or unexpected set conditions, maintaining a seamless experience for actors and cinematographers alike.
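A decision loop that must finish within a few milliseconds is usually monitored against an explicit time budget. This is a small sketch of that check, assuming the control step is an ordinary callable; `timed_step` and the budget value are hypothetical, and production firmware would run on a real-time scheduler rather than in Python.

```python
import time


def timed_step(step, budget_s: float):
    """Run one control step and report whether it met its time budget.

    Returns (result, elapsed_seconds, met_deadline).
    """
    start = time.perf_counter()
    result = step()
    elapsed = time.perf_counter() - start
    return result, elapsed, elapsed <= budget_s


# Example: a stand-in planning step checked against a 5 ms budget.
result, elapsed, ok = timed_step(lambda: sum(range(100)), budget_s=0.005)
```

When a step misses its deadline, a typical response is to reuse the previous command or fall back to a simpler planner rather than stall the motors.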

High-Dynamic-Range Control Systems & Actuator Thermal Management

High-performance actuators generate heat under sustained loads, risking thermal throttling or failure mid-shoot. To tackle this, modern rigs leverage high-dynamic-range (HDR) control electronics that regulate power delivery precisely, minimizing excessive currents during acceleration peaks. Coupled with cooling hardware such as heat pipes, micro-fans, or phase-change materials, the system maintains optimal motor and driver temperatures. This thermal management extends filming durations and preserves motion fidelity, especially during demanding scenes like long takes or rapid action sequences.
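Regulating power delivery against temperature is often done with a derating curve. The sketch below shows one simple form, assuming a motor temperature reading in degrees Celsius is available; `derate_current` and the 70 °C and 90 °C thresholds are hypothetical, not values from any specific driver.

```python
def derate_current(requested_a: float, temp_c: float,
                   warn_c: float = 70.0, max_c: float = 90.0) -> float:
    """Limit the motor current (amps) as the temperature rises.

    Below warn_c the request passes through unchanged. Between warn_c and
    max_c the allowed current falls linearly to zero. At or above max_c
    the output is cut entirely.
    """
    if temp_c <= warn_c:
        return requested_a
    if temp_c >= max_c:
        return 0.0
    return requested_a * (max_c - temp_c) / (max_c - warn_c)
```

Tapering the current gradually lets a long take finish at reduced acceleration instead of ending in a hard thermal shutdown.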

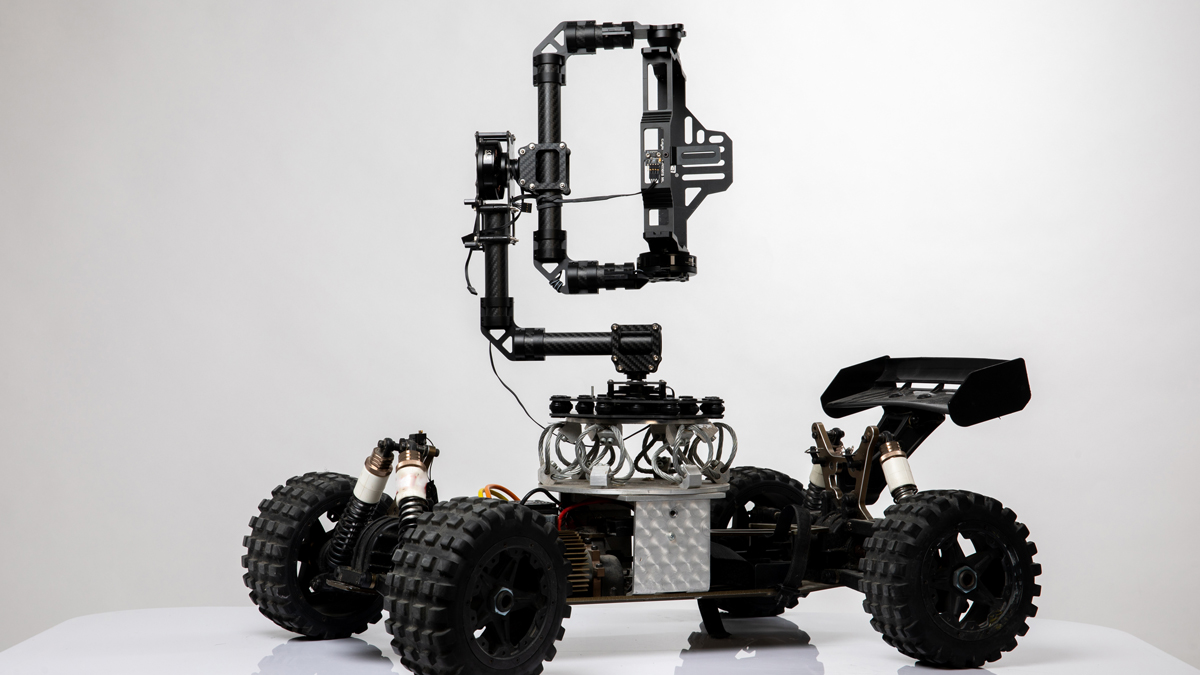

Modular Rig Design: Scalability Across Studio, Field & Aerial Shoots

Versatility is key in today’s production landscape. Modular rig architectures allow filmmakers to swap cores (studio dollies, outdoor track units, or drone-compatible gimbals) while reusing the same control modules and software stack. Quick-release mounts, standardized power and data connectors, and adjustable booms transform a single base unit into multiple specialized configurations. This scalability drives down equipment costs, simplifies logistics, and ensures crews can adapt swiftly from a controlled studio environment to rugged outdoor or aerial shooting scenarios.

Seamless Virtual Production: Syncing Rigs with Unreal Engine Pipelines

Virtual sets and LED volumes are now mainstays of modern filmmaking. Autonomous rigs integrate natively with real-time engines like Unreal Engine, sharing positional data via OSC or SDI protocols. As the virtual background shifts in response to camera moves, the system synchronizes frame-by-frame, preserving accurate parallax and lighting cues. Cinematographers can preview composite shots live, adjust rig movements within the virtual environment, and ensure that physical and digital elements blend flawlessly, streamlining post-production and enriching on-set creativity.
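To make the OSC path concrete, here is a minimal sketch that encodes a camera position as an OSC message using only the standard library. The byte layout follows the OSC 1.0 format (padded address, padded type-tag string, big-endian floats), but the address `/rig/camera/position`, the host, and the port are assumptions; the receiving engine would need to be configured to listen for them.

```python
import socket
import struct


def _pad(raw: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC requires."""
    return raw + b"\x00" * (4 - len(raw) % 4)


def osc_message(address: str, *values: float) -> bytes:
    """Encode an OSC message carrying 32-bit float arguments."""
    type_tags = "," + "f" * len(values)
    payload = struct.pack(f">{len(values)}f", *values)  # big-endian floats
    return _pad(address.encode("ascii")) + _pad(type_tags.encode("ascii")) + payload


def send_position(x: float, y: float, z: float,
                  host: str = "127.0.0.1", port: int = 8000) -> None:
    """Send one position sample over UDP (host and port are hypothetical)."""
    packet = osc_message("/rig/camera/position", x, y, z)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

Calling `send_position` once per frame gives the engine a fresh camera location to render from, which is what keeps parallax on the LED wall consistent with the physical move.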

Data-Driven Workflow Automation: From Previs to Live Overrides

Data collected during previsualization (previs), such as camera angles, motion curves, and timing, feeds directly into autonomous rigs. By importing previs files, rigs automatically execute complex sequences without manual programming. Cinematographers retain ultimate control, however: live overrides via touchscreen or joystick can tweak speed, adjust framing, or interrupt a sequence entirely. These dual modes, automated playback and manual intervention, combine efficiency with flexibility, reducing setup times and empowering creatives to experiment spontaneously on set.
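The two modes can be sketched as a keyframe player whose clock is scaled by a live override. This is an illustration under stated assumptions: previs data is reduced to `(time, value)` pairs for a single axis, and `sample` and `Playhead` are hypothetical names rather than any previs tool's API.

```python
from bisect import bisect_right


def sample(keyframes, t):
    """Linearly interpolate a sorted list of (time, value) keyframes at time t."""
    times = [k[0] for k in keyframes]
    if t <= times[0]:
        return keyframes[0][1]
    if t >= times[-1]:
        return keyframes[-1][1]
    i = bisect_right(times, t)  # index of the first keyframe after t
    (t0, v0), (t1, v1) = keyframes[i - 1], keyframes[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


class Playhead:
    """Plays back keyframes; the operator can scale speed or pause live."""

    def __init__(self, keyframes):
        self.keyframes = keyframes
        self.time = 0.0

    def advance(self, dt, speed_override=1.0):
        """Move the clock by dt scaled by the override (0.0 pauses)."""
        self.time += dt * speed_override
        return sample(self.keyframes, self.time)
```

Because the override only scales the clock, the rig stays on the previs path while the operator slows, pauses, or resumes the move.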

Cloud-Based Fleet Orchestration & Remote Diagnostics

Large-scale productions often deploy multiple rigs across different stages or locations. Cloud-based orchestration platforms unify all units under a single dashboard, allowing supervisors to schedule shots, push software updates, and monitor health metrics remotely. Automated diagnostics alert technicians to potential issues, such as motor wear, sensor misalignment, or battery degradation, before they cause downtime. Remote logging and analytics also help allocate resources efficiently, plan maintenance windows around shooting schedules, and ensure every rig is mission-ready at a moment’s notice.
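At its simplest, an automated diagnostic is a set of threshold checks run over each rig's reported metrics. The sketch below assumes rigs upload a flat dictionary of readings; the metric names and limits are hypothetical placeholders, not fields from any real orchestration platform.

```python
# Hypothetical limits; a real fleet would tune these per rig model.
UPPER_LIMITS = {"motor_temp_c": 85.0, "encoder_error_mm": 0.5}
LOWER_LIMITS = {"battery_health_pct": 70.0}


def check_rig(metrics: dict) -> list:
    """Return human-readable alerts for metrics outside their limits.

    Metrics missing from the report are skipped rather than flagged.
    """
    alerts = []
    for name, limit in UPPER_LIMITS.items():
        if name in metrics and metrics[name] > limit:
            alerts.append(f"{name} above limit: {metrics[name]} > {limit}")
    for name, floor in LOWER_LIMITS.items():
        if name in metrics and metrics[name] < floor:
            alerts.append(f"{name} below limit: {metrics[name]} < {floor}")
    return alerts


def check_fleet(fleet: dict) -> dict:
    """Map rig id to its alerts, keeping only rigs that need attention."""
    report = {rig_id: check_rig(m) for rig_id, m in fleet.items()}
    return {rig_id: a for rig_id, a in report.items() if a}
```

Fixed thresholds catch outright faults; spotting gradual wear before it causes downtime would additionally need trend analysis over logged history.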

Regulatory Compliance & On-Set Safety Protocols for Autonomous Systems

Introducing autonomous machinery on set requires rigorous adherence to safety standards. Manufacturers build in redundant fail-safe circuits, emergency-stop buttons, and physical bumpers. Systems perform self-checks at startup, verify actuation limits, and enforce geofenced boundaries to prevent unintended travel. Safety officers must train crews on operation protocols, emergency procedures, and regulatory guidelines, such as those from the Occupational Safety and Health Administration (OSHA) or local film commission rules. Clear documentation and routine audits ensure that innovation never comes at the cost of on-set wellbeing.
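A geofence check is easy to express in code: every commanded target is tested against an allowed region before the rig moves. This is a minimal sketch assuming a rectangular floor area and a latched emergency-stop flag; `Geofence` and `safe_to_move` are hypothetical names, and software checks like this complement hardware interlocks rather than replace them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Geofence:
    """Axis-aligned rectangular boundary in meters."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def safe_to_move(fence: Geofence, target, estop_pressed: bool) -> bool:
    """Allow motion only if the e-stop is clear and the target is in bounds."""
    if estop_pressed:
        return False
    return fence.contains(*target)


stage = Geofence(x_min=0.0, x_max=10.0, y_min=0.0, y_max=6.0)
```

Rejecting an out-of-bounds target outright, instead of clamping it to the boundary, makes the refusal visible to the operator.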

Continuous Learning & Firmware Updates: Future-Proofing Autonomous Rigs

The rise of autonomous camera rigs is just the beginning. Through over-the-air (OTA) firmware updates, rigs can receive new features, such as improved AI planners, enhanced sensor algorithms, or streamlined user interfaces, without swapping hardware. Some platforms even support federated learning, where anonymized performance data from multiple productions helps refine motion models globally. This continuous-learning approach keeps rigs at the cutting edge, enabling filmmakers to tackle new creative challenges and ensuring that today’s investment remains valuable in tomorrow’s rapidly evolving production landscape.
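Federated learning typically combines locally trained model parameters with a weighted average, so raw footage and logs never leave each production. The sketch below shows that averaging step in its simplest form, assuming each site reports a flat parameter list and a sample count; `federated_average` is a hypothetical name, not a platform API.

```python
def federated_average(updates):
    """Average parameter vectors, weighted by each site's sample count.

    updates: list of (parameters, sample_count) pairs, where parameters is
             a list of floats and all lists have the same length.
    """
    total = sum(count for _, count in updates)
    if total == 0:
        raise ValueError("no samples to average")
    size = len(updates[0][0])
    return [
        sum(params[i] * count for params, count in updates) / total
        for i in range(size)
    ]


# Example: two productions, the second with three times as much data.
merged = federated_average([([1.0, 2.0], 1), ([3.0, 4.0], 3)])
```

Weighting by sample count means a production that logged more moves has proportionally more influence on the shared model.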